Program 9: Logistic Taxi

CSci 39542: Introduction to Data Science

Department of Computer Science

Hunter College, City University of New York

Spring 2022

Classwork Quizzes Homework Project

Program Description

Program 9: Logistic Taxis. Due noon, Thursday, 7 April.

As in Program 8, this program is tailored to the NYC OpenData Yellow Taxi Trip Data and follows standard strategy for data cleaning and model building:

In your program, include the following functions from Program 8. You may use your earlier functions or the Program 8 solution available on Blackboard:

For example, let's start by setting up a DataFrame, as we did in Program 8, with the file, taxi_4July2020.csv, add in the tip and time features, and imputing missing values for which prints out the new columns:

We can add the indicators for if a toll was paid and if the trip started and ended in different boroughs:

Let's explore the data some:

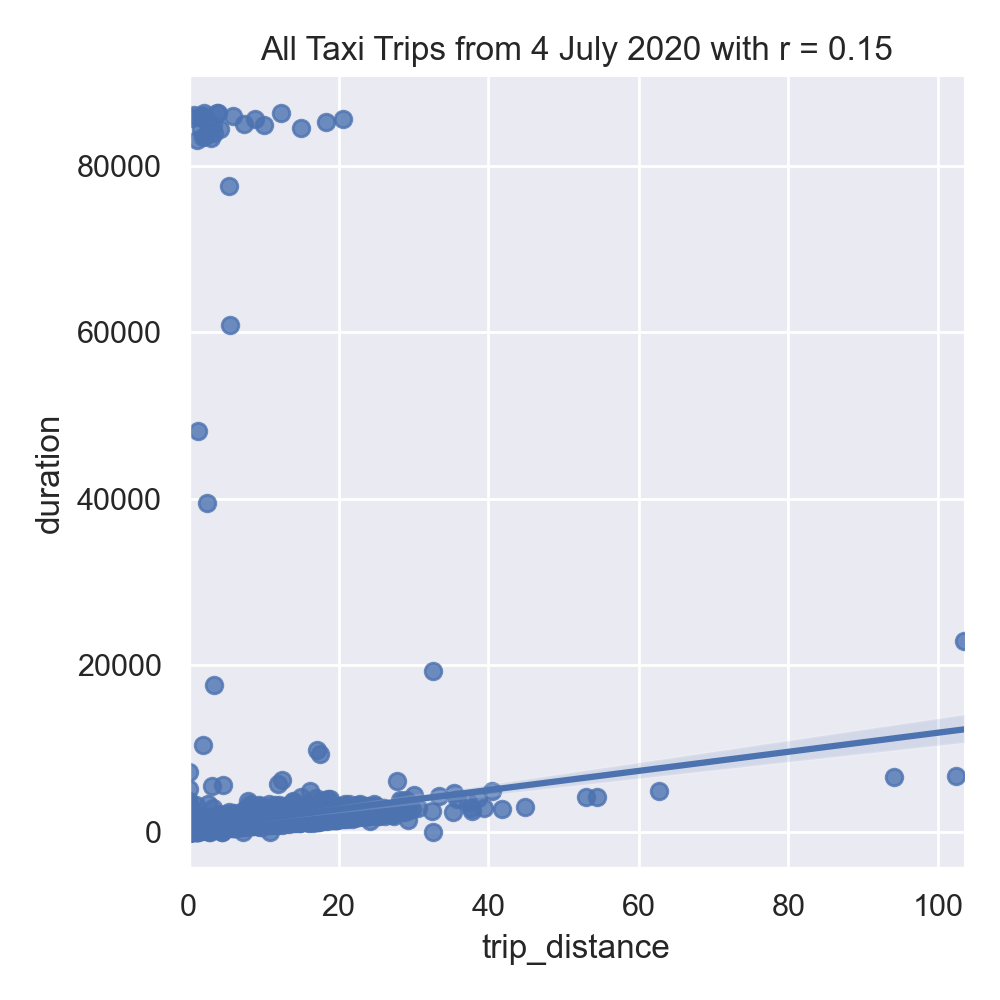

The resulting plot:

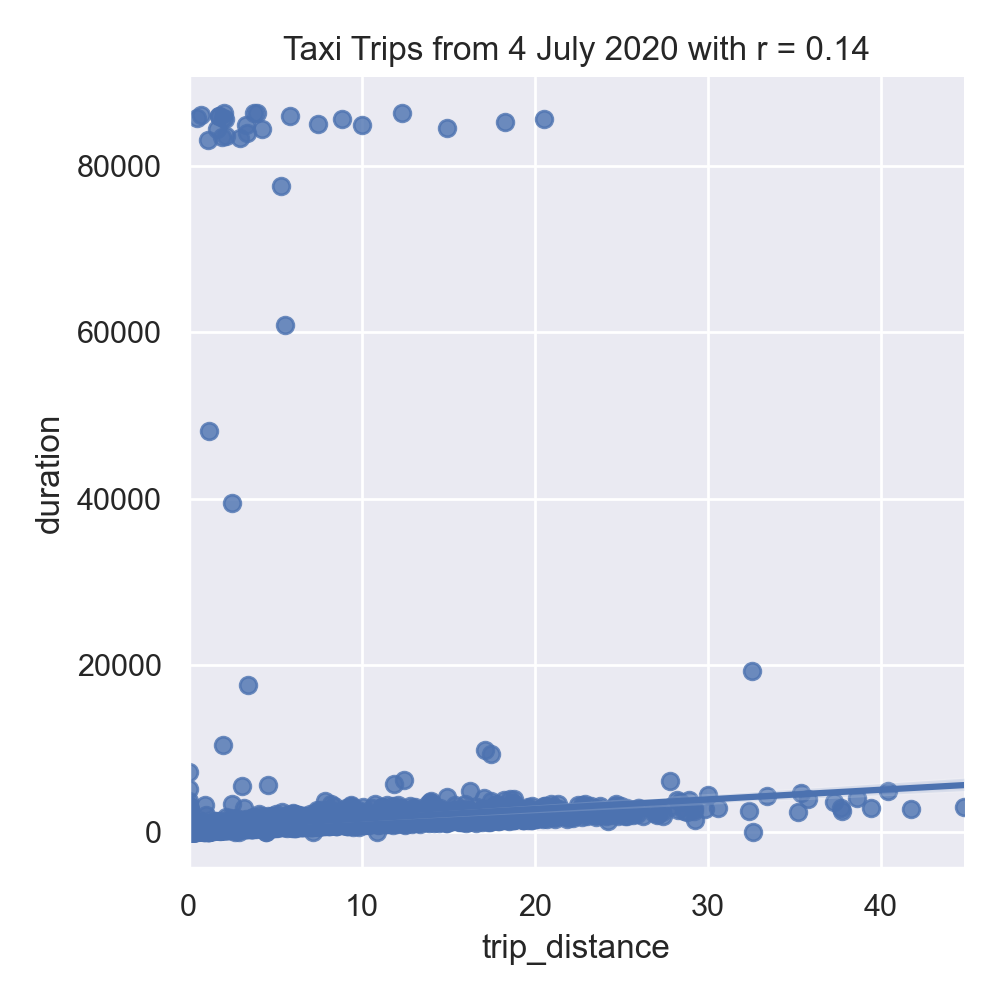

There are some extremely long trips in there-- some over 100 miles. To focus on trips that stay within the city, let's limit our data to trips that are less than 50 miles in distance, and explore the data by making scatter plots of some of the features:

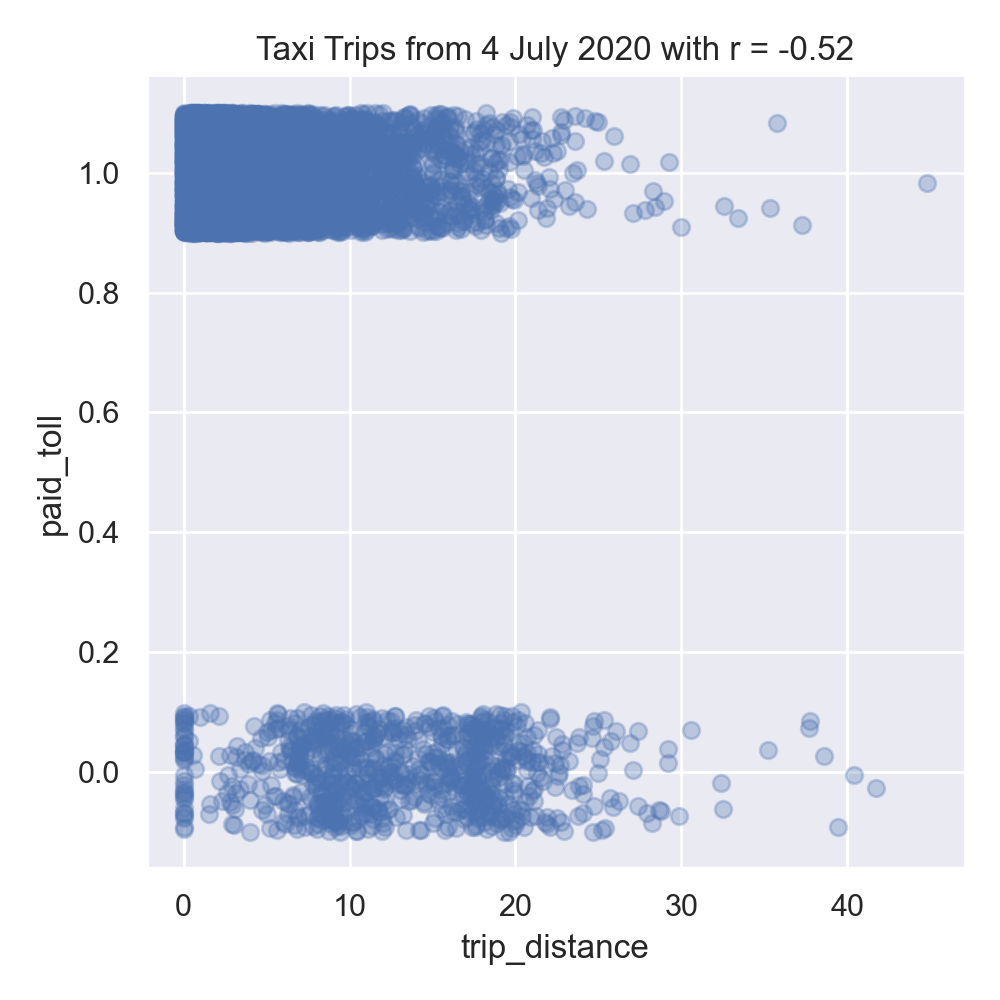

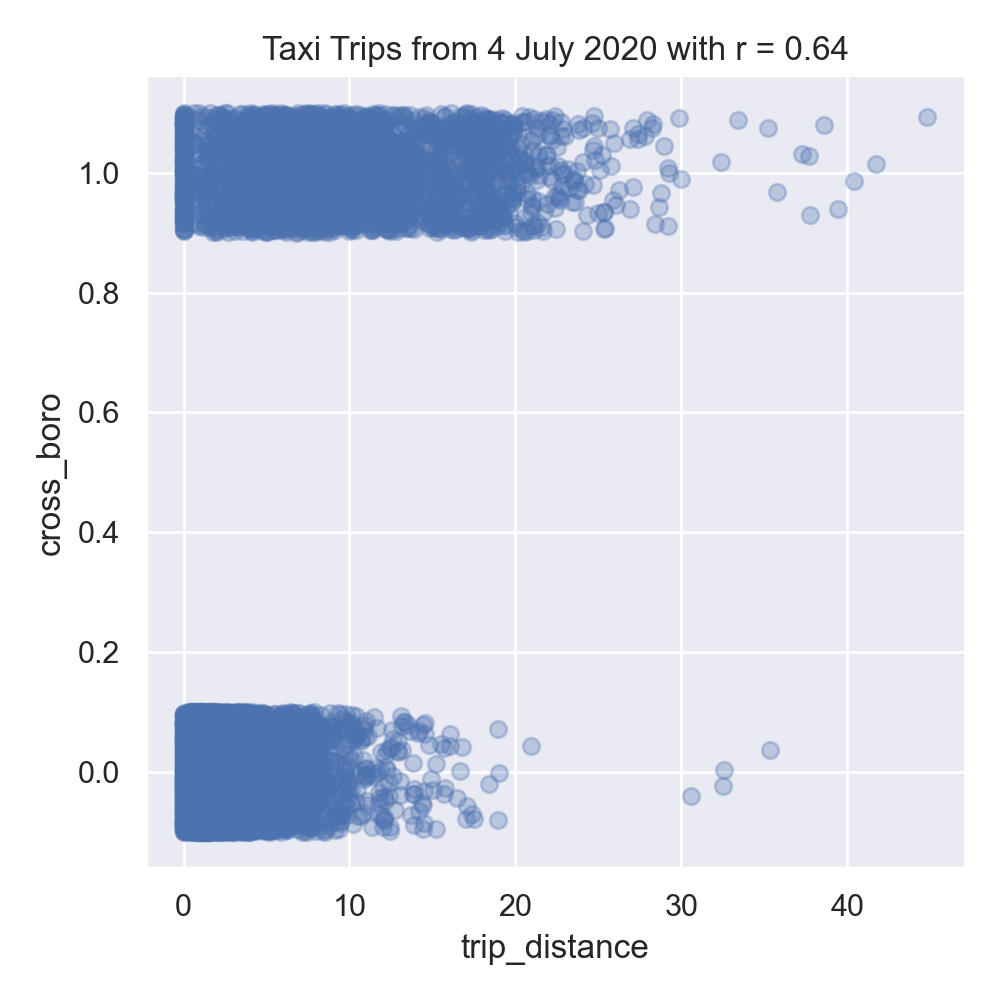

As discussed in Lecture 16 and Chapter 24, we added jitter to the y-values to better visualize the data since so much has similar values:

Interestingly, in our left image, the distance traveled and the duration of the trip are not strongly correlated. The middle image show negative correlation between trip distance and paying tolls. While the right images shows the trip distance positively correlated with trips that start and end in different boroughs.

Next, let's encode the categorical columns for pick up and drop off boroughs so we can use them as inputs for our model.

The first few lines of the resulting DataFrames:

Let's combine all the DataFrames into one (using concat along column axis):

The combined DataFrame has the columns:

Now, we're ready to fit some models to our data. We'll try first just a single independent variable, Our training set of 75% of the data, does well both on both training and testing. For this data, regularization does not significantly affect the results:

Let's use more of the numeric columns for a model, as well as different regularization approaches, and evaluate the results. We increased the number of iterations to allow the model to converge.

All of the models do better with the training subset than the testing subset. Adding regularization did not help the mean squared error, but showed some improvement for the r2 measure:

Learning Objective: to train and validate models, given quantitative and qualitative data, as well as assessing model quality.

Available Libraries: pandas, datetime, pickle, sklearn, and core Python 3.6+.

(Note if you use our annonations, you should from typing import Union.)

Data Sources: Yellow Taxi Trip Data and NYC Taxi Zones from OpenData NYC.

Sample Datasets: taxi_new_years_day_2020.csv,

taxi_4July2020.csv,

taxi_jfk_june2020.csv, and

taxi_zones.csv.

To identify which trips are most likely to cross between boroughs, this program will focus on building a logistic regression model on both the categorical and numerical features of our dataset.

The function specifications are below:

And write the following new functions:

import_data(file_name) -> pd.DataFrame:

This function takes as one input parameter:

The data in the file is read into a DataFrame, and the resulting DataFrame is returned. file_name: the name of a CSV file containing Yellow Taxi Trip Data from OpenData NYC.

(Note this is identical to the function with the same name in Program 8. You may use your earlier function or the Program 8 solution available on Blackboard.)

add_tip_time_features(df) -> pd.DataFrame:

This function takes one input:

The function computes 3 new columns:

df: a DataFrame containing Yellow Taxi Trip Data from OpenData NYC.

The original DataFrame with these additional three columns is returned.

percent_tip: which is 100*tip_amount/(total_amount-tip_amount)

duration: the time the trip took in seconds.

dayofweek: the day of the week that the trip started, represented as 0 for Monday, 1 for Tuesday, ... 6 for Sunday.

(Note this is identical to the function with the same name in Program 8. You may use your earlier function or the Program 8 solution available on Blackboard.)

impute_numeric_cols(df, x_num_cols) -> pd.DataFrame:

This function takes two inputs:

Missing data in the columns df: a DataFrame containing Yellow Taxi Trip Data from OpenData NYC.

x_num_cols: a list of numerical columns in df.

x_num_cols are replaced with the median of the column. Returns a DataFrame containing only the imputed numerical columns from input df.

(Note this is identical to the function with the same name in Program 8. You may use your earlier function or the Program 8 solution available on Blackboard.)

add_boro(df, file_name) -> pd.DataFrame:

This function takes as two input parameters:

Makes a DataFrame, using df: a DataFrame containing Yellow Taxi Trip Data from OpenData NYC.

file_name: the name of a CSV file containing NYC Taxi Zones from OpenData NYC.

file_name, to add pick up and drop off boroughs to df.

In particular, adds two new columns to the df:

Returns PU_borough that contain the borough corresponding to the pick up taxi zone ID (stored in PULocationID), and

DO_borough that contain the borough corresponding to the drop off taxi zone (stored in DOLocationID)

df with these two additional columns (PU_borough and DO_borough).

add_flags(df) -> pd.DataFrame:

This function takes one input parameter:

Adds two new columns:

df: a DataFrame containing Yellow Taxi Trip Data from OpenData NYC to which add_boro() has been applied.

Returns paid_toll which is 1 if a toll was paid on the trip and 0 in no tolls were paid.

cross_boro which is 1 if the trip started and ended in different borough, and 0 if the trip started and ended in the same borough.

df with these two additional columns (paid_toll and cross_boro).

encode_categorical_col(col,prefix) -> pd.DataFrame:

This function takes two input parameters:

Takes a column of categorical data and uses categorical encoding to create a new DataFrame with the k-1 columns, where k is the number of different nomial values for the column. Your function should create k columns, one for each value, labels by the prefix concatenated with the value. The columns should be sorted and the DataFrame restricted to the first k-1 columns returned. For example, if the column contains values: 'Bronx', 'Brooklyn', 'Manhattan', 'Queens', and 'Staten Island', and the col: a column of categorical data.

prefix: a prefix to use for the labels of the resulting columns.

prefix parameter has the value 'PU_' (and set the separators to be the empty string: prefix_sep=''), then the resulting columns would be labeled: 'PU_Bronx', 'PU_Brooklyn', 'PU_Manhattan', 'PU_Queens', and 'PU_Staten Island'. The last one alphabetically is dropped (in this example, 'PU_Staten Island') and the DataFrame restricted to the first k-1 columns is returned. Note: we presented several different ways to categorically encode nomial data in Lecture 14. The book details an approach using sklearn in Chapter 20,

and you may find Panda's get_dummies useful.

split_test_train(df, xes_col_names, y_col_name,

test_size=0.25, random_state=12345) -> Union[pd.DataFrame, pd.DataFrame, pd.Series(), pd.Series()]:

This function takes 5 input parameters:

Calls sklearn's train_test_split function to split the data set into a training and testing subsets: x_train, x_test, y_train, y_test. The resulting 4 subsets are returned.df: a DataFrame containing Yellow Taxi Trip Data from OpenData NYC to which add_boro() has been applied.

y_col_name: the name of the column of the dependent variable.

xes_col_names: a list of columns that contain the independent variables.

test_size: accepts a float between 0 and 1 and represents the proportion of the data set to use for training. This parameter has a default value of 0.25.

random_state: Used as a seed to the randomization. This parameter has a default value of 12345.

Hint: see the examples for Program 8 for a similar splitting of data into training and testing datasets.

fit_logistic_regression(x_train, y_train,penalty='none',max_iter=1000) -> object:

This function takes four input parameter:

Fits a logistic regression model to the x_train: the indepenent variable(s) for the analysis.

y_train: the dependent variable for the analysis.

penalty: the type of regularization applied. The default value for this parameter is 'none'.

max_iter: number of iterations allowed when fitting model. The default value for this parameter is 1000.

x_train and

y_train data, using the logistic model from sklearn.linear_model. The model should use the solver = 'saga' to allow all the options for regularization (called penalty as the option to the model) be any of 'elasticnet', 'l1',

'l2', and 'none').

The parameter max_iter should also be used when fitting the model.

The resulting model should be returned as bytestream, using pickle.

predict_using_trained_model(mod_pkl, x, y) -> Union[float, float]:

This function takes three inputs:

Computes and returns the mean squared error and r2 score between the values predicted by the model (mod_pkl: a trained model for the data, stored in pickle format.

x: an array or DataFrame of numeric columns with no null values.

y: an array or DataFrame of numeric columns with no null values.

mod on x) and the actual values (y). Note that sklearn.metrics contains two functions that may be of use: mean_squared_error and r2_score.

passenger_count:

Next, let's use our new functions to add in boroughs for the pick up and drop off locations:

df = import_data('taxi_4July2020.csv')

df = add_tip_time_features(df)

df['passenger_count'] = impute_numeric_cols(df,['passenger_count'])

df = add_boro(df,'taxi_zones.csv')

print('\nThe locations and borough columns:')

print(f"{df[['PULocationID','PU_borough','DOLocationID','DO_borough']]}")The locations and borough columns:

PULocationID PU_borough DOLocationID DO_borough

0 68 Manhattan 170 Manhattan

1 48 Manhattan 239 Manhattan

2 142 Manhattan 264 NaN

3 48 Manhattan 68 Manhattan

4 186 Manhattan 79 Manhattan

... ... ... ... ...

168930 138 Queens 231 Manhattan

168931 90 Manhattan 244 Manhattan

168932 229 Manhattan 140 Manhattan

168933 138 Queens 143 Manhattan

168934 132 Queens 25 Brooklyn

[168935 rows x 4 columns]

prints:

df = add_flags(df)

print(df[['trip_distance','PU_borough','DO_borough','paid_toll','cross_boro']])

trip_distance PU_borough DO_borough paid_toll cross_boro

0 2.20 Manhattan Manhattan 0 0

1 1.43 Manhattan Manhattan 0 0

2 1.74 Manhattan NaN 0 1

3 1.35 Manhattan Manhattan 0 0

4 2.33 Manhattan Manhattan 0 0

... ... ... ... ... ...

168930 9.28 Queens Manhattan 0 1

168931 9.10 Manhattan Manhattan 0 0

168932 0.80 Manhattan Manhattan 0 0

168933 9.55 Queens Manhattan 1 1

168934 18.72 Queens Brooklyn 0 1

[168935 rows x 5 columns]

import matplotlib.pyplot as plt

import seaborn as sns

sns.set_theme(color_codes=True)

sns.lmplot(x="trip_distance", y="duration", data=df)

tot_r = df['trip_distance'].corr(df['duration'])

plt.title(f'All Taxi Trips from 4 July 2020 with r = {tot_r:.2f}')

plt.tight_layout() #for nicer margins

plt.show()

df = df[df['trip_distance'] < 50]

sns.lmplot(x="trip_distance", y="duration", data=df)

tot_r = df['trip_distance'].corr(df['duration'])

plt.title(f'Taxi Trips from 4 July 2020 with r = {tot_r:.2f}')

plt.tight_layout() #for nicer margins

plt.show()

sns.lmplot(x="trip_distance", y="paid_toll", data=df,fit_reg=False,y_jitter=0.1,

scatter_kws={'alpha': 0.3})

dist_r = df['trip_distance'].corr(df['paid_toll'])

plt.title(f'Taxi Trips from 4 July 2020 with r = {dist_r:.2f}')

plt.tight_layout() #for nicer margins

plt.show()

sns.lmplot(x="trip_distance", y="cross_boro", data=df,fit_reg=False,y_jitter=0.1,

scatter_kws={'alpha': 0.3})

dist_r = df['trip_distance'].corr(df['cross_boro'])

plt.title(f'Taxi Trips from 4 July 2020 with r = {dist_r:.2f}')

plt.tight_layout() #for nicer margins

plt.show()

df_pu = encode_categorical_col(df['PU_borough'],'PU_')

print(df_pu.head())

df_do = encode_categorical_col(df['DO_borough'],'DO_')

print(df_do.head()) PU_Bronx PU_Brooklyn DO_EWR PU_Manhattan PU_Queens

0 0 0 0 1 0

1 0 0 0 1 0

2 0 0 0 1 0

3 0 0 0 1 0

4 0 0 0 1 0

DO_Bronx DO_Brooklyn DO_EWR DO_Manhattan DO_Queens

0 0 0 0 1 0

1 0 0 0 1 0

2 0 0 0 0 0

3 0 0 0 1 0

4 0 0 0 1 0

df_all = pd.concat( [df,df_pu,df_do], axis=1)

print(f'The combined DataFrame has columns: {df_all.columns}')

For the taxi data, there is a special zone for trips to Newark Airport, and as such we have a drop off borough location of 'DO_EWR'.

We'll focus on the numeric columns, split our data into training and testing data sets:

The combined DataFrame has columns:

Index(['VendorID', 'tpep_pickup_datetime', 'tpep_dropoff_datetime',

'passenger_count', 'trip_distance', 'RatecodeID', 'store_and_fwd_flag',

'PULocationID', 'DOLocationID', 'payment_type', 'fare_amount', 'extra',

'mta_tax', 'tip_amount', 'tolls_amount', 'improvement_surcharge',

'total_amount', 'congestion_surcharge', 'percent_tip', 'duration',

'dayofweek', 'DO_borough', 'PU_borough', 'paid_toll', 'cross_boro',

'PU_Bronx', 'PU_Brooklyn', 'PU_EWR', 'PU_Manhattan', 'PU_Queens',

'DO_Bronx', 'DO_Brooklyn', 'DO_EWR', 'DO_Manhattan', 'DO_Queens'],

dtype='object')x_col_names = ['passenger_count', 'trip_distance', 'RatecodeID', 'PULocationID',

'DOLocationID', 'payment_type', 'fare_amount', 'extra', 'mta_tax',

'tip_amount', 'tolls_amount', 'improvement_surcharge', 'total_amount',

'congestion_surcharge', 'percent_tip', 'duration', 'dayofweek',

'paid_toll', 'PU_Bronx', 'PU_Brooklyn', 'PU_Manhattan', 'PU_Queens',

'DO_Bronx', 'DO_Brooklyn', 'DO_EWR', 'DO_Manhattan', 'DO_Queens']

y_col_name = 'cross_boro'

x_train, x_test, y_train, y_test = split_test_train(df_all, x_col_names, y_col_name)

trip_distance, and build a logistic model without regularization, to predict when trips start in one borough and end in another (when cross_boro is 1):

for p in ['none','l1','l2']:

print(f'Fitting a model with regression = {p}:')

mod = fit_logistic_regression(x_train[['trip_distance']],y_train,penalty=p)

mse_tr, r2_tr = predict_using_trained_model(mod,x_train[['trip_distance']],y_train)

print(f'\ttraining data: mean squared error = {mse_tr:8.8} and r2 = {r2_tr:4.4}.')

mse_val, r2_val = predict_using_trained_model(mod,x_test[['trip_distance']],y_test)

print(f'\ttesting data: mean squared error = {mse_val:8.8} and r2 = {r2_val:4.4}.')Fitting a model with regression = none:

training data: mean squared error = 0.08759994 and r2 = 0.3548.

testing data: mean squared error = 0.087015201 and r2 = 0.3617.

Fitting a model with regression = l1:

training data: mean squared error = 0.087663081 and r2 = 0.3543.

testing data: mean squared error = 0.087038879 and r2 = 0.3616.

Fitting a model with regression = l2:

training data: mean squared error = 0.08759994 and r2 = 0.3548.

testing data: mean squared error = 0.087015201 and r2 = 0.3617.x_cols = ['trip_distance','dayofweek','paid_toll', 'PU_Bronx', 'PU_Brooklyn','PU_Manhattan', 'PU_Queens']

print(f'For independent variables: {x_cols}:')

for p in ['none','l1','l2']:

print(f'Fitting a model with regression = {p}:')

mod = fit_logistic_regression(x_train[x_cols],y_train,penalty=p,max_iter=2000)

mse_tr, r2_tr = predict_using_trained_model(mod,x_train[x_cols],y_train)

print(f'\ttraining data: mean squared error = {mse_tr:8.8} and r2 = {r2_tr:4.4}.')

mse_val, r2_val = predict_using_trained_model(mod,x_test[x_cols],y_test)

print(f'\ttesting data: mean squared error = {mse_val:8.8} and r2 = {r2_val:4.4}.')

For independent variables:

['trip_distance', 'dayofweek', 'paid_toll', 'PU_Bronx', 'PU_Brooklyn', 'PU_Manhattan', 'PU_Queens']:

Fitting a model with regression = none:

training data: mean squared error = 0.072974957 and r2 = 0.4625.

testing data: mean squared error = 0.072003599 and r2 = 0.4719.

Fitting a model with regression = l1:

training data: mean squared error = 0.072974957 and r2 = 0.4625.

testing data: mean squared error = 0.072003599 and r2 = 0.4719.

Fitting a model with regression = l2:

training data: mean squared error = 0.072959172 and r2 = 0.4626.

testing data: mean squared error = 0.071956244 and r2 = 0.4722.